This article shows an example of how to manage NSX-T firewall rules as code with Terraform. You can find the project on my GitHub account: nsxt-frac-tf-cm and nsxt-frac-tf-rm.

I will describe the structure of the project, how it works, the data model and the Terraform code, and finish with a demo.

Structure of the project

The diagram below summarizes how I organized the project to take full advantage of infrastructure as code.

Below is the file structure:

```
# Child modules
nsxt-frac-tf-cm
├── nsxt-tf-cm-dfw
│   ├── main.tf
│   ├── outputs.tf
│   └── variables.tf
├── nsxt-tf-cm-grp
│   ├── main.tf
│   ├── outputs.tf
│   └── variables.tf
└── nsxt-tf-cm-svc
    ├── main.tf
    ├── outputs.tf
    └── variables.tf

# Root modules
nsxt-frac-tf-rm
├── nsxt-tf-rm-dfw
│   ├── main.tf
│   ├── provider.tf
│   ├── terraform.tfvars
│   └── variables.tf
└── nsxt-tf-rm-grpsvc
    ├── main.tf
    ├── outputs.tf
    ├── provider.tf
    ├── terraform.tfvars
    └── variables.tf
```

In short, the child modules contain the logic and the root modules contain the variables.

You can then duplicate the root module for each NSX-T environment you want to deploy and set the variables of that environment.

How it works

Child Module

The logic and the code complexity live in the Terraform child modules.

They create the NSX-T resources and centralize the complexity: if you need to change the logic, you only modify the child modules.

After a modification, you only need to pull the new version of the child modules from the root module by reinitializing them with “terraform init -upgrade” (a plain “terraform init” does not re-download modules that are already installed), and you can then start using the new features.

There are three child modules:

- nsxt-tf-cm-svc: creates the NSX-T services (TCP/UDP/IP)

- nsxt-tf-cm-grp: creates the NSX-T groups (TAG and IP)

- nsxt-tf-cm-dfw: creates the NSX-T policies and rules

Root Module

The root module calls the child modules with the variables of a given NSX-T environment. You need one repository per environment, and the only thing you modify is the variables.

The root module can be adapted to different NSX-T environments/deployments as long as its variable structure respects the data model of the child modules.

There are two root modules:

nsxt-tf-rm-grpsvc

This module calls the groups and services child modules and passes them the groups and services map variables.

As the groups and services can be centralized and shared by all environments, I preferred to manage them separately from the policies and rules.

nsxt-tf-rm-dfw

This module defines the policies and rules. It reads the outputs stored in the Terraform state of the nsxt-tf-rm-grpsvc root module to get all the groups and services it needs.

These outputs are defined in the outputs.tf file of the nsxt-tf-rm-grpsvc root module.

Data model

For more details on the available attributes, you can refer to the official documentation of the NSX-T Terraform provider.

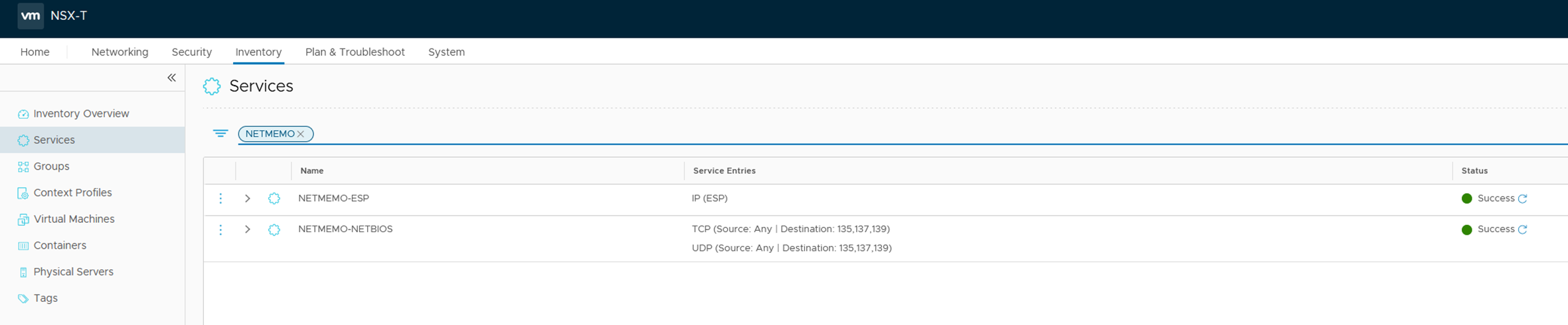

Services

map_svc

This is the map variable passed to the child module.

- This variable is a map of service maps, keyed by service name.

- Each service contains one or more lists.

- Each list is named after the service type (IP, TCP or UDP).

- An IP list contains a protocol number; a TCP or UDP list contains port numbers.

```hcl
map_svc = {
  NETMEMO-ESP     = { IP = ["50"] }
  NETMEMO-NETBIOS = { TCP = ["135","137","139","139"], UDP = ["135","137","139","139"] }
}
```
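The variables.tf files are not reproduced in this article. As a sketch only, this data model could be enforced in the services child module with a type constraint and validation rules such as the ones below. The type and both rules are assumptions, not the project's code; the second rule mirrors the fact that the services child module only reads the first element of an IP list.

```hcl
# Hypothetical declaration of map_svc, sketched from the data model above
variable "map_svc" {
  description = "Services keyed by name; each service holds IP, TCP and/or UDP lists"
  type        = map(map(list(string)))

  # Only lists named IP, TCP or UDP are handled by the services child module
  validation {
    condition = alltrue(flatten([
      for svc in values(var.map_svc) : [for key in keys(svc) : contains(["IP", "TCP", "UDP"], key)]
    ]))
    error_message = "Each service may only contain lists named IP, TCP or UDP."
  }

  # An IP list must hold exactly one protocol number
  validation {
    condition = alltrue(flatten([
      for svc in values(var.map_svc) : [for key, val in svc : length(val) == 1 if key == "IP"]
    ]))
    error_message = "An IP list must contain exactly one protocol number."
  }
}
```

alltrue() requires Terraform 0.14 or later, which matches the requirements listed in the demo.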

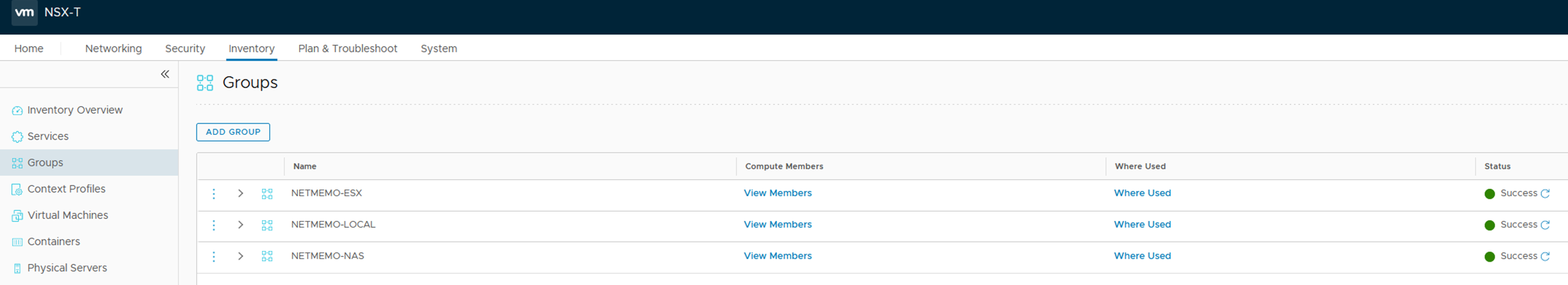

Groups

map_grp

This is the map variable passed to the child module.

- This variable is a map of group maps, keyed by group name.

- Each group contains one or more lists.

- Each list is named after the group type (IP or TAG).

- An IP list contains subnets; a TAG list contains tag names.

```hcl
map_grp = {
  NETMEMO-LOCAL = { IP = ["10.0.0.0/25","10.1.1.0/25"] }
  NETMEMO-NAS   = { TAG = ["NAS","FILES"] }
  NETMEMO-ESX   = { TAG = ["ESX","VMWARE"] }
}
```
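In the same spirit, a hypothetical map_grp declaration could reject malformed subnets at plan time. This is a sketch, not the project's code: can(cidrhost(...)) only accepts CIDR notation, which may be stricter than what NSX-T accepts in an IP expression, so adapt it if you also use single addresses or ranges.

```hcl
# Hypothetical declaration of map_grp, sketched from the data model above
variable "map_grp" {
  description = "Groups keyed by name; each group holds an IP list and/or a TAG list"
  type        = map(map(list(string)))

  # Every entry of an IP list must parse as a CIDR block (IPv4 or IPv6)
  validation {
    condition = alltrue(flatten([
      for grp in values(var.map_grp) : [
        for key, val in grp : [for cidr in val : can(cidrhost(cidr, 0))] if key == "IP"
      ]
    ]))
    error_message = "Every entry of an IP list must be a subnet in CIDR notation."
  }
}
```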

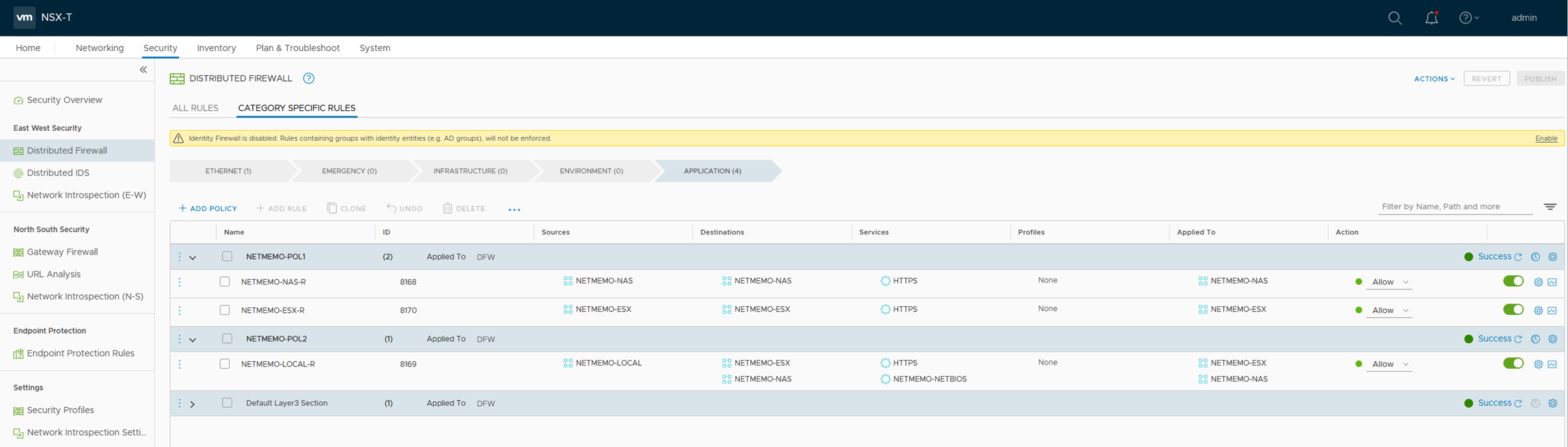

Policies

map_policies

This is the map variable passed to the child module.

- This variable is a map of policy maps, keyed by policy name.

- Each policy contains attributes that are either strings or maps; the map named “rules” defines the rules.

- The “rules” map contains one map per rule, keyed by rule name.

- Each rule map contains string attributes and list attributes (sources, destinations, services, scope).

```hcl
map_policies = {
  NETMEMO-POL1 = {
    category        = "Application"
    sequence_number = "10"
    rules = {
      netmemo-rule1 = {
        display      = "NETMEMO-NAS-R"
        sources      = ["NETMEMO-NAS"]
        destinations = ["NETMEMO-NAS"]
        services     = ["HTTPS"]
        scope        = ["NETMEMO-NAS"]
        action       = "ALLOW"
        disabled     = "false"
      }
      netmemo-rule2 = {
        display      = "NETMEMO-ESX-R"
        sources      = ["NETMEMO-ESX"]
        destinations = ["NETMEMO-ESX"]
        services     = ["HTTPS"]
        scope        = ["NETMEMO-ESX"]
        action       = "ALLOW"
        disabled     = "false"
      }
    }
  }
  NETMEMO-POL2 = {
    category        = "Application"
    sequence_number = "20"
    rules = {
      netmemo-rule1 = {
        display      = "NETMEMO-LOCAL-R"
        sources      = ["NETMEMO-LOCAL"]
        destinations = ["NETMEMO-NAS","NETMEMO-ESX"]
        services     = ["NETMEMO-NETBIOS","HTTPS"]
        scope        = ["NETMEMO-NAS","NETMEMO-ESX"]
        action       = "ALLOW"
        disabled     = "false"
      }
    }
  }
}
```
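A type constraint can also document this nested structure. The sketch below is a hypothetical declaration of map_policies, not the project's code: with an object type, Terraform rejects a rule that lacks one of these attributes (for example because its name is misspelled) at plan time, before anything is sent to NSX-T. The attributes are kept as strings to match the values above.

```hcl
# Hypothetical declaration of map_policies, sketched from the data model above
variable "map_policies" {
  description = "Policies keyed by name, each with its rules keyed by rule name"
  type = map(object({
    category        = string
    sequence_number = string
    rules = map(object({
      display      = string
      sources      = list(string) # group names, resolved to NSX-T paths by the child module
      destinations = list(string) # group names
      services     = list(string) # service names
      scope        = list(string) # group names
      action       = string
      disabled     = string
    }))
  }))
}
```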

Code Explanation

Child Module

nsxt-tf-cm-grp and nsxt-tf-cm-svc child modules

These child modules combine a for_each on the resource with nested, conditional dynamic blocks.

A brief example of nested for_each used with dynamic blocks can be found here. The conditional behavior comes from the if clause that filters the for expression; you can find the documentation on this link.

Terraform dynamic blocks create one nested block for each element of the collection given to their for_each argument. In our child modules, the elements given to the dynamic for_each are first filtered with a for expression and an if clause.

If the map contains a TAG list, we create a criteria block with one condition per tag.

```hcl
dynamic "criteria" {
  # The for_each contains a for expression with a filter, so the criteria block
  # is created only if there is a list named TAG
  for_each = { for key, val in each.value : key => val if key == "TAG" }
  content {
    dynamic "condition" {
      # Loop over the list to create one condition per tag
      for_each = criteria.value
      content {
        key         = "Tag"
        member_type = "VirtualMachine"
        operator    = "EQUALS"
        value       = condition.value
      }
    }
  }
}
```

If the map contains an IP list, we create a criteria block with an ipaddress_expression holding the subnets.

```hcl
dynamic "criteria" {
  # The for_each contains a for expression with a filter, so the criteria block
  # is created only if there is a list named IP
  for_each = { for key, val in each.value : key => val if key == "IP" }
  content {
    ipaddress_expression {
      ip_addresses = criteria.value
    }
  }
}
```
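To see what these filters hand to the dynamic blocks, the standalone sketch below applies the same for expressions to a copy of two map_grp entries. It only uses locals and outputs, so it runs with terraform init and terraform apply in an empty directory without any provider. It is an illustration, not code from the child module; the local and output names are made up.

```hcl
# Standalone demo: only locals and outputs, so no provider is required
locals {
  # Copy of two entries of map_grp from terraform.tfvars
  map_grp = {
    NETMEMO-LOCAL = { IP = ["10.0.0.0/25", "10.1.1.0/25"] }
    NETMEMO-NAS   = { TAG = ["NAS", "FILES"] }
  }

  # Same filter as the TAG criteria for_each, evaluated for every group
  tag_filter = {
    for name, lists in local.map_grp : name => { for key, val in lists : key => val if key == "TAG" }
  }

  # Same filter as the IP criteria for_each, evaluated for every group
  ip_filter = {
    for name, lists in local.map_grp : name => { for key, val in lists : key => val if key == "IP" }
  }
}

output "tag_filter" {
  value = local.tag_filter
}

output "ip_filter" {
  value = local.ip_filter
}
```

For NETMEMO-LOCAL the TAG filter returns an empty map, so the TAG dynamic block produces no criteria block, while the IP filter returns the subnet list; NETMEMO-NAS gives the opposite result.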

The same logic is used to create the services.

If the map contains a TCP or UDP list, we create one l4_port_set_entry block per protocol with the ports of that list.

```hcl
dynamic "l4_port_set_entry" {
  # each.value = map of TCP, UDP or IP lists, so l4_port_set_entry.key will be TCP or UDP
  # The for_each contains a for expression with a filter, so l4_port_set_entry
  # is created only if there is a list named TCP or UDP
  for_each = { for key, val in each.value : key => val if key == "TCP" || key == "UDP" }
  content {
    display_name      = "${l4_port_set_entry.key}_${each.key}"
    protocol          = l4_port_set_entry.key
    destination_ports = l4_port_set_entry.value
  }
}
```

If the map contains an IP list, we create an ip_protocol_entry block with the protocol number.

```hcl
dynamic "ip_protocol_entry" {
  # each.value = map of TCP, UDP or IP lists
  # The for_each contains a for expression with a filter, so ip_protocol_entry
  # is created only if there is a list named IP
  for_each = { for key, val in each.value : key => val if key == "IP" }
  content {
    # [0] because the IP list holds a single protocol number and the protocol
    # attribute expects a number, not a collection
    protocol = ip_protocol_entry.value[0]
  }
}
```
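The standalone sketch below (locals and outputs only, no provider needed) shows what the services filters produce for the map_svc example: the display name generated for each l4_port_set_entry and the protocol number taken from an IP list. It reuses the filter expressions above; the local and output names are illustrative, and tonumber() only makes the string-to-number conversion explicit.

```hcl
# Standalone demo: only locals and outputs, so no provider is required
locals {
  # Copy of map_svc from terraform.tfvars
  map_svc = {
    NETMEMO-ESP     = { IP = ["50"] }
    NETMEMO-NETBIOS = { TCP = ["135", "137", "139", "139"], UDP = ["135", "137", "139", "139"] }
  }

  # display_name of each l4_port_set_entry, built like "${l4_port_set_entry.key}_${each.key}"
  l4_entry_names = {
    for name, lists in local.map_svc : name => [
      for key, val in lists : "${key}_${name}" if key == "TCP" || key == "UDP"
    ]
  }

  # protocol of each ip_protocol_entry: the first (and only) element of the IP list
  ip_protocols = {
    for name, lists in local.map_svc : name => [
      for key, val in lists : tonumber(val[0]) if key == "IP"
    ]
  }
}

output "l4_entry_names" {
  value = local.l4_entry_names
}

output "ip_protocols" {
  value = local.ip_protocols
}
```

NETMEMO-NETBIOS yields TCP_NETMEMO-NETBIOS and UDP_NETMEMO-NETBIOS, the display names visible in the demo plan, and NETMEMO-ESP yields protocol 50.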

nsxt-tf-cm-dfw child module

The complexity of this module lies in retrieving the NSX-T path attributes of the groups and services from the names used in the policies map variable. We want the policies and rules definitions to be as user-friendly as possible and independent of values specific to the NSX-T implementation, such as paths. This also allows us to reuse the policies variable values in other environments, for instance when the rules are generic.

To retrieve the paths, we loop over each list of the rule (sources in the example below) and look up the path attribute of the matching group, wrapped in try().

```hcl
source_groups = [for x in rule.value["sources"] : try(var.nsxt_policy_grp_grp[x].path)]
```
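The line above handles groups. In the demo, the service name "HTTPS", which is not defined in map_svc, ends up as "/infra/services/HTTPS", so the services lookup presumably falls back to the NSX-T predefined service path for names it does not know. The standalone sketch below reproduces that behavior under this assumption; the fallback expression and the local names are illustrative, not the module's actual code, and the sample paths are copied from the demo plan.

```hcl
# Standalone sketch (locals and outputs only): resolve names to NSX-T paths
locals {
  # Stand-ins for the grp and svc outputs of nsxt-tf-rm-grpsvc
  grp = {
    NETMEMO-LOCAL = { path = "/infra/domains/default/groups/21d93fff-c08c-42dd-90da-d099dfa51163" }
  }
  svc = {
    NETMEMO-NETBIOS = { path = "/infra/services/59a29e53-9901-44d2-9589-f770c36d87bb" }
  }

  # One rule written with names only, as in map_policies
  rule = {
    sources  = ["NETMEMO-LOCAL"]
    services = ["NETMEMO-NETBIOS", "HTTPS"]
  }

  source_groups = [for x in local.rule.sources : local.grp[x].path]

  # Assumption: a name missing from svc is an NSX-T predefined service such as HTTPS
  services = [for x in local.rule.services : try(local.svc[x].path, "/infra/services/${x}")]
}

output "source_groups" {
  value = local.source_groups
}

output "services" {
  value = local.services
}
```

The services output contains the NETMEMO-NETBIOS path followed by "/infra/services/HTTPS", the same list the demo plan shows for the NETMEMO-LOCAL-R rule.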

Root Module

nsxt-tf-rm-grpsvc

Below is how a root module calls a child module stored in Git.

```hcl
module "nsxt-tf-cm-svc" {
  source  = "git::https://github.com/netmemo/nsxt-frac-tf-cm.git//nsxt-tf-cm-svc"
  map_svc = var.map_svc
}
```
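Because this source points to the default branch of the repository, “terraform init -upgrade” pulls whatever the child modules currently contain. If you prefer to control when a root module picks up child module changes, a Git source can be pinned with the ref argument. A sketch, where v1.0.0 is a hypothetical tag:

```hcl
module "nsxt-tf-cm-svc" {
  # "?ref=" pins the child module to a Git tag, branch or commit;
  # v1.0.0 is a hypothetical tag name
  source  = "git::https://github.com/netmemo/nsxt-frac-tf-cm.git//nsxt-tf-cm-svc?ref=v1.0.0"
  map_svc = var.map_svc
}
```

Moving to a new version then becomes an explicit change of ref followed by “terraform init”.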

Below is the code of the outputs.tf file, which exposes the groups and services maps as outputs in the Terraform state. Other root modules can then read them through the terraform_remote_state data source.

```hcl
output "grp" {
  value = module.nsxt-tf-cm-grp.grp
}

output "svc" {
  value = module.nsxt-tf-cm-svc.svc
}
```
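These root module outputs read outputs named grp and svc from the child modules. A minimal sketch of what the groups child module could expose, assuming its resource is nsxt_policy_group.grp as shown in the demo plan: exporting the whole resource map, keyed by group name, matches the full group objects listed under “Changes to Outputs” in the demo and makes the path attribute available to other root modules.

```hcl
# nsxt-tf-cm-grp/outputs.tf (sketch): export every group, keyed by its map_grp name
output "grp" {
  value = nsxt_policy_group.grp
}
```

The services child module would do the same with nsxt_policy_service.svc.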

nsxt-tf-rm-dfw

Below is the code that reads the state of nsxt-tf-rm-grpsvc as a remote state; in this case, the state is a local file.

```hcl
data "terraform_remote_state" "grpsvc" {
  backend = "local"
  config = {
    path = "../nsxt-tf-rm-grpsvc/terraform.tfstate"
  }
}
```
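The groups and services read from this state then need to reach the policies child module. A sketch of the module call in nsxt-tf-rm-dfw, assuming the child module inputs are named map_policies, nsxt_policy_grp_grp (the name used in the path lookup of the dfw child module) and a hypothetical nsxt_policy_svc_svc for the services:

```hcl
module "nsxt-tf-cm-dfw" {
  source = "git::https://github.com/netmemo/nsxt-frac-tf-cm.git//nsxt-tf-cm-dfw"

  map_policies = var.map_policies

  # Groups and services created by nsxt-tf-rm-grpsvc, read from its state file
  nsxt_policy_grp_grp = data.terraform_remote_state.grpsvc.outputs.grp
  # Hypothetical input name for the services map
  nsxt_policy_svc_svc = data.terraform_remote_state.grpsvc.outputs.svc
}
```

Since nsxt-tf-rm-dfw depends on that state file, nsxt-tf-rm-grpsvc has to be applied first, which is the order followed in the demo.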

Demo

Requirements:

- Terraform 0.14+

- NSX-T 3.0.2+

- Clone the root module nsxt-frac-tf-rm

To run a test, follow the steps below:

1. Clone the root module repo

```
git clone git@github.com:netmemo/nsxt-frac-tf-rm.git
Cloning into 'nsxt-frac-tf-rm'...
remote: Enumerating objects: 21, done.
remote: Counting objects: 100% (21/21), done.
remote: Compressing objects: 100% (15/15), done.
remote: Total 21 (delta 7), reused 20 (delta 6), pack-reused 0
Receiving objects: 100% (21/21), done.
Resolving deltas: 100% (7/7), done.
```

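2. Move to the root module directory

Steps 3 to 5 are run in nsxt-tf-rm-grpsvc, which creates the groups and services. Steps 3, 4 and 6 are then run in nsxt-tf-rm-dfw, which reads the state of nsxt-tf-rm-grpsvc to create the policies and rules.

```
cd nsxt-frac-tf-rm/<root module directory>
```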

3. Initialize Terraform

```
terraform init
Initializing modules...
Downloading git::https://github.com/netmemo/nsxt-frac-tf-cm.git for nsxt-tf-cm-dfw...
- nsxt-tf-cm-dfw in .terraform/modules/nsxt-tf-cm-dfw/nsxt-tf-cm-dfw

Initializing the backend...

Initializing provider plugins...
- terraform.io/builtin/terraform is built in to Terraform
- Finding vmware/nsxt versions matching ">= 3.1.1"...
- Installing vmware/nsxt v3.2.2...
- Installed vmware/nsxt v3.2.2 (signed by a HashiCorp partner, key ID 6B6B0F38607A2264)

Partner and community providers are signed by their developers.
If you'd like to know more about provider signing, you can read about it here:
https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider
selections it made above. Include this file in your version control repository
so that Terraform can guarantee to make the same selections by default when
you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

4. Edit the provider.tf file

Change the host, username and password according to your environment.

You can either add the variables to the terraform.tfvars file or enter them when Terraform prompts for them.

```hcl
provider "nsxt" {
  host     = var.host
  username = "admin"
  password = var.password
}
```
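The provider block refers to var.host and var.password. A sketch of matching declarations in variables.tf, assuming no other settings are needed: marking the password as sensitive (supported since Terraform 0.14) makes Terraform redact it wherever it would otherwise be displayed.

```hcl
# variables.tf (sketch): declarations for the values used in provider.tf
variable "host" {
  description = "NSX-T Manager address"
  type        = string
}

variable "password" {
  description = "NSX-T admin password"
  type        = string
  sensitive   = true # redacted in plan and apply output
}
```

If a value is not set in terraform.tfvars, Terraform prompts for it; it can also be supplied through an environment variable such as TF_VAR_password.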

5. Create the groups and services

```
terraform plan -out plan.out

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following
symbols:
  + create

Terraform will perform the following actions:

  # module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-ESX"] will be created
  + resource "nsxt_policy_group" "grp" {
      + display_name = "NETMEMO-ESX"
      + domain       = "default"
      + id           = (known after apply)
      + nsx_id       = (known after apply)
      + path         = (known after apply)
      + revision     = (known after apply)

      + criteria {
          + condition {
              + key         = "Tag"
              + member_type = "VirtualMachine"
              + operator    = "EQUALS"
              + value       = "ESX"
            }
          + condition {
              + key         = "Tag"
              + member_type = "VirtualMachine"
              + operator    = "EQUALS"
              + value       = "VMWARE"
            }
        }
    }

  # module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-LOCAL"] will be created
  + resource "nsxt_policy_group" "grp" {
      + display_name = "NETMEMO-LOCAL"
      + domain       = "default"
      + id           = (known after apply)
      + nsx_id       = (known after apply)
      + path         = (known after apply)
      + revision     = (known after apply)

      + criteria {
          + ipaddress_expression {
              + ip_addresses = [
                  + "10.0.0.0/25",
                  + "10.1.1.0/25",
                ]
            }
        }
    }

  # module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-NAS"] will be created
  + resource "nsxt_policy_group" "grp" {
      + display_name = "NETMEMO-NAS"
      + domain       = "default"
      + id           = (known after apply)
      + nsx_id       = (known after apply)
      + path         = (known after apply)
      + revision     = (known after apply)

      + criteria {
          + condition {
              + key         = "Tag"
              + member_type = "VirtualMachine"
              + operator    = "EQUALS"
              + value       = "NAS"
            }
          + condition {
              + key         = "Tag"
              + member_type = "VirtualMachine"
              + operator    = "EQUALS"
              + value       = "FILES"
            }
        }
    }

  # module.nsxt-tf-cm-svc.nsxt_policy_service.svc["NETMEMO-ESP"] will be created
  + resource "nsxt_policy_service" "svc" {
      + display_name = "NETMEMO-ESP"
      + id           = (known after apply)
      + nsx_id       = (known after apply)
      + path         = (known after apply)
      + revision     = (known after apply)

      + ip_protocol_entry {
          + protocol = 50
        }
    }

  # module.nsxt-tf-cm-svc.nsxt_policy_service.svc["NETMEMO-NETBIOS"] will be created
  + resource "nsxt_policy_service" "svc" {
      + display_name = "NETMEMO-NETBIOS"
      + id           = (known after apply)
      + nsx_id       = (known after apply)
      + path         = (known after apply)
      + revision     = (known after apply)

      + l4_port_set_entry {
          + destination_ports = [
              + "135",
              + "137",
              + "139",
            ]
          + display_name      = "TCP_NETMEMO-NETBIOS"
          + protocol          = "TCP"
          + source_ports      = []
        }
      + l4_port_set_entry {
          + destination_ports = [
              + "135",
              + "137",
              + "139",
            ]
          + display_name      = "UDP_NETMEMO-NETBIOS"
          + protocol          = "UDP"
          + source_ports      = []
        }
    }

Plan: 5 to add, 0 to change, 0 to destroy.

Changes to Outputs:
  + grp = {
      + NETMEMO-ESX   = {
          + conjunction       = []
          + criteria          = [
              + {
                  + condition             = [
                      + {
                          + key         = "Tag"
                          + member_type = "VirtualMachine"
                          + operator    = "EQUALS"
                          + value       = "ESX"
                        },
                      + {
                          + key         = "Tag"
                          + member_type = "VirtualMachine"
                          + operator    = "EQUALS"
                          + value       = "VMWARE"
                        },
                    ]
                  + ipaddress_expression  = []
                  + macaddress_expression = []
                  + path_expression       = []
                },
            ]
          + description       = null
          + display_name      = "NETMEMO-ESX"
          + domain            = "default"
          + extended_criteria = []
          + id                = (known after apply)
          + nsx_id            = (known after apply)
          + path              = (known after apply)
          + revision          = (known after apply)
          + tag               = []
        }
      + NETMEMO-LOCAL = {
          + conjunction       = []
          + criteria          = [
              + {
                  + condition             = []
                  + ipaddress_expression  = [
                      + {
                          + ip_addresses = [
                              + "10.0.0.0/25",
                              + "10.1.1.0/25",
                            ]
                        },
                    ]
                  + macaddress_expression = []
                  + path_expression       = []
                },
            ]
          + description       = null
          + display_name      = "NETMEMO-LOCAL"
          + domain            = "default"
          + extended_criteria = []
          + id                = (known after apply)
          + nsx_id            = (known after apply)
          + path              = (known after apply)
          + revision          = (known after apply)
          + tag               = []
        }
      + NETMEMO-NAS   = {
          + conjunction       = []
          + criteria          = [
              + {
                  + condition             = [
                      + {
                          + key         = "Tag"
                          + member_type = "VirtualMachine"
                          + operator    = "EQUALS"
                          + value       = "NAS"
                        },
                      + {
                          + key         = "Tag"
                          + member_type = "VirtualMachine"
                          + operator    = "EQUALS"
                          + value       = "FILES"
                        },
                    ]
                  + ipaddress_expression  = []
                  + macaddress_expression = []
                  + path_expression       = []
                },
            ]
          + description       = null
          + display_name      = "NETMEMO-NAS"
          + domain            = "default"
          + extended_criteria = []
          + id                = (known after apply)
          + nsx_id            = (known after apply)
          + path              = (known after apply)
          + revision          = (known after apply)
          + tag               = []
        }
    }
  + svc = {
      + NETMEMO-ESP     = {
          + algorithm_entry   = []
          + description       = null
          + display_name      = "NETMEMO-ESP"
          + ether_type_entry  = []
          + icmp_entry        = []
          + id                = (known after apply)
          + igmp_entry        = []
          + ip_protocol_entry = [
              + {
                  + description  = ""
                  + display_name = ""
                  + protocol     = 50
                },
            ]
          + l4_port_set_entry = []
          + nsx_id            = (known after apply)
          + path              = (known after apply)
          + revision          = (known after apply)
          + tag               = []
        }
      + NETMEMO-NETBIOS = {
          + algorithm_entry   = []
          + description       = null
          + display_name      = "NETMEMO-NETBIOS"
          + ether_type_entry  = []
          + icmp_entry        = []
          + id                = (known after apply)
          + igmp_entry        = []
          + ip_protocol_entry = []
          + l4_port_set_entry = [
              + {
                  + description       = ""
                  + destination_ports = [
                      + "135",
                      + "137",
                      + "139",
                    ]
                  + display_name      = "TCP_NETMEMO-NETBIOS"
                  + protocol          = "TCP"
                  + source_ports      = []
                },
              + {
                  + description       = ""
                  + destination_ports = [
                      + "135",
                      + "137",
                      + "139",
                    ]
                  + display_name      = "UDP_NETMEMO-NETBIOS"
                  + protocol          = "UDP"
                  + source_ports      = []
                },
            ]
          + nsx_id            = (known after apply)
          + path              = (known after apply)
          + revision          = (known after apply)
          + tag               = []
        }
    }

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Saved the plan to: plan.out
```

```
terraform apply plan.out
module.nsxt-tf-cm-svc.nsxt_policy_service.svc["NETMEMO-NETBIOS"]: Creating...
module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-NAS"]: Creating...
module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-LOCAL"]: Creating...
module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-ESX"]: Creating...
module.nsxt-tf-cm-svc.nsxt_policy_service.svc["NETMEMO-ESP"]: Creating...
module.nsxt-tf-cm-svc.nsxt_policy_service.svc["NETMEMO-ESP"]: Creation complete after 0s [id=434fdc00-30e6-4072-a64b-a7e9534c80c2]
module.nsxt-tf-cm-svc.nsxt_policy_service.svc["NETMEMO-NETBIOS"]: Creation complete after 0s [id=59a29e53-9901-44d2-9589-f770c36d87bb]
module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-ESX"]: Creation complete after 0s [id=0fb48626-d903-42cc-9513-b47e7626f44d]
module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-LOCAL"]: Creation complete after 0s [id=21d93fff-c08c-42dd-90da-d099dfa51163]
module.nsxt-tf-cm-grp.nsxt_policy_group.grp["NETMEMO-NAS"]: Creation complete after 0s [id=bac7f9c7-d80c-4bfe-8663-d3c9f973e2fb]

Apply complete! Resources: 5 added, 0 changed, 0 destroyed.
...
```

6. Create the policies and rules

```
terraform plan -out plan.out

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following
symbols:
  + create

Terraform will perform the following actions:

  # module.nsxt-tf-cm-dfw.nsxt_policy_security_policy.policies["NETMEMO-POL1"] will be created
  + resource "nsxt_policy_security_policy" "policies" {
      + category        = "Application"
      + display_name    = "NETMEMO-POL1"
      + domain          = "default"
      + id              = (known after apply)
      + locked          = false
      + nsx_id          = (known after apply)
      + path            = (known after apply)
      + revision        = (known after apply)
      + sequence_number = 10
      + stateful        = true
      + tcp_strict      = (known after apply)

      + rule {
          + action                = "ALLOW"
          + destination_groups    = [
              + "/infra/domains/default/groups/bac7f9c7-d80c-4bfe-8663-d3c9f973e2fb",
            ]
          + destinations_excluded = false
          + direction             = "IN_OUT"
          + disabled              = false
          + display_name          = "NETMEMO-NAS-R"
          + ip_version            = "IPV4_IPV6"
          + logged                = false
          + nsx_id                = (known after apply)
          + revision              = (known after apply)
          + rule_id               = (known after apply)
          + scope                 = [
              + "/infra/domains/default/groups/bac7f9c7-d80c-4bfe-8663-d3c9f973e2fb",
            ]
          + sequence_number       = (known after apply)
          + services              = [
              + "/infra/services/HTTPS",
            ]
          + source_groups         = [
              + "/infra/domains/default/groups/bac7f9c7-d80c-4bfe-8663-d3c9f973e2fb",
            ]
          + sources_excluded      = false
        }
      + rule {
          + action                = "ALLOW"
          + destination_groups    = [
              + "/infra/domains/default/groups/0fb48626-d903-42cc-9513-b47e7626f44d",
            ]
          + destinations_excluded = false
          + direction             = "IN_OUT"
          + disabled              = false
          + display_name          = "NETMEMO-ESX-R"
          + ip_version            = "IPV4_IPV6"
          + logged                = false
          + nsx_id                = (known after apply)
          + revision              = (known after apply)
          + rule_id               = (known after apply)
          + scope                 = [
              + "/infra/domains/default/groups/0fb48626-d903-42cc-9513-b47e7626f44d",
            ]
          + sequence_number       = (known after apply)
          + services              = [
              + "/infra/services/HTTPS",
            ]
          + source_groups         = [
              + "/infra/domains/default/groups/0fb48626-d903-42cc-9513-b47e7626f44d",
            ]
          + sources_excluded      = false
        }
    }

  # module.nsxt-tf-cm-dfw.nsxt_policy_security_policy.policies["NETMEMO-POL2"] will be created
  + resource "nsxt_policy_security_policy" "policies" {
      + category        = "Application"
      + display_name    = "NETMEMO-POL2"
      + domain          = "default"
      + id              = (known after apply)
      + locked          = false
      + nsx_id          = (known after apply)
      + path            = (known after apply)
      + revision        = (known after apply)
      + sequence_number = 20
      + stateful        = true
      + tcp_strict      = (known after apply)

      + rule {
          + action                = "ALLOW"
          + destination_groups    = [
              + "/infra/domains/default/groups/0fb48626-d903-42cc-9513-b47e7626f44d",
              + "/infra/domains/default/groups/bac7f9c7-d80c-4bfe-8663-d3c9f973e2fb",
            ]
          + destinations_excluded = false
          + direction             = "IN_OUT"
          + disabled              = false
          + display_name          = "NETMEMO-LOCAL-R"
          + ip_version            = "IPV4_IPV6"
          + logged                = false
          + nsx_id                = (known after apply)
          + revision              = (known after apply)
          + rule_id               = (known after apply)
          + scope                 = [
              + "/infra/domains/default/groups/0fb48626-d903-42cc-9513-b47e7626f44d",
              + "/infra/domains/default/groups/bac7f9c7-d80c-4bfe-8663-d3c9f973e2fb",
            ]
          + sequence_number       = (known after apply)
          + services              = [
              + "/infra/services/59a29e53-9901-44d2-9589-f770c36d87bb",
              + "/infra/services/HTTPS",
            ]
          + source_groups         = [
              + "/infra/domains/default/groups/21d93fff-c08c-42dd-90da-d099dfa51163",
            ]
          + sources_excluded      = false
        }
    }

Plan: 2 to add, 0 to change, 0 to destroy.
```

```
terraform apply plan.out
module.nsxt-tf-cm-dfw.nsxt_policy_security_policy.policies["NETMEMO-POL1"]: Creating...
module.nsxt-tf-cm-dfw.nsxt_policy_security_policy.policies["NETMEMO-POL2"]: Creating...
module.nsxt-tf-cm-dfw.nsxt_policy_security_policy.policies["NETMEMO-POL2"]: Creation complete after 1s [id=bab4c14e-30c6-4633-b9d7-db760844e0e7]
module.nsxt-tf-cm-dfw.nsxt_policy_security_policy.policies["NETMEMO-POL1"]: Creation complete after 1s [id=867b5893-15e3-4dab-a2f0-c8084af790a7]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.
```